Kubernetes troubleshooting is the process of identifying, diagnosing, and resolving issues that may arise within a Kubernetes cluster, nodes, pods, or containers. This involves investigating and resolving problems related to resource allocation, networking, storage, and application performance, among other things. It also involves implementing preventive measures to avoid future issues and ensuring the continuous availability and optimal performance of Kubernetes components. The goal of Kubernetes troubleshooting is to minimize downtime, maintain high availability, and ensure the overall health of the Kubernetes infrastructure.

Because Kubernetes is such a complex platform, there are many places you could look to find the root cause of an issue.

kubectl commands

kubectl is a powerful tool for Kubernetes troubleshooting because it lets administrators inspect and manage clusters from the command line. It supports tasks such as listing resources (kubectl get), showing their detailed state and events (kubectl describe), viewing container logs (kubectl logs), and running commands inside containers (kubectl exec). Using kubectl effectively helps you identify and resolve issues in a Kubernetes environment.
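The helpers below are a minimal sketch of how those four verbs fit together. They build the kubectl argument lists and run them with Python's subprocess module. The helper names are hypothetical. Only standard kubectl flags are used (-n, -o wide, -c, --previous, and the -- separator). Command construction is kept separate from execution, so the helpers can be checked without a cluster.

```python
import subprocess
from typing import List, Optional, Sequence


def kubectl_cmd(verb: str, *args: str, namespace: Optional[str] = None) -> List[str]:
    """Build a kubectl argument list (hypothetical helper).

    -n is placed before the verb so it can never land after a "--" separator,
    where it would be passed to the container instead of to kubectl.
    """
    cmd = ["kubectl"]
    if namespace:
        cmd += ["-n", namespace]
    return cmd + [verb, *args]


def get_pods(namespace: Optional[str] = None) -> List[str]:
    # kubectl get pods -o wide: status, restarts, node and pod IP at a glance
    return kubectl_cmd("get", "pods", "-o", "wide", namespace=namespace)


def describe_pod(name: str, namespace: Optional[str] = None) -> List[str]:
    # kubectl describe pod <name>: spec, conditions and recent Events
    return kubectl_cmd("describe", "pod", name, namespace=namespace)


def pod_logs(name: str, container: Optional[str] = None,
             previous: bool = False, namespace: Optional[str] = None) -> List[str]:
    args = [name]
    if container:
        args += ["-c", container]   # choose one container in a multi-container pod
    if previous:
        args.append("--previous")   # logs of the last terminated instance
    return kubectl_cmd("logs", *args, namespace=namespace)


def exec_in_pod(name: str, command: Sequence[str],
                namespace: Optional[str] = None) -> List[str]:
    # Everything after "--" is passed to the container, not to kubectl.
    return kubectl_cmd("exec", name, "--", *command, namespace=namespace)


def run(cmd: Sequence[str]) -> subprocess.CompletedProcess:
    # check=False: a failing kubectl call returns its stderr instead of raising.
    return subprocess.run(list(cmd), capture_output=True, text=True, check=False)
```

For example, run(describe_pod("nginx")).stdout would hold the output of kubectl describe pod nginx for the current kubeconfig context.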

The official kubectl cheat sheet covers the most commonly used commands:

https://kubernetes.io/docs/reference/kubectl/cheatsheet/

Analyzing logs - Pods

Analyzing logs is an important aspect of Kubernetes troubleshooting as it helps in identifying and resolving issues within a cluster. By examining the logs, administrators can gain insights into the behavior of the application and its components, such as containers, pods, and nodes. This information can be used to troubleshoot and debug problems related to performance, configuration, errors, and more. Additionally, logs can be used to monitor and audit the cluster, providing valuable insights into its operation and security.
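When a log stream is long, a quick first pass is to pull out the lines that look like errors. The function below is a minimal sketch of that idea. The keyword list is an illustrative assumption, not a standard, and should be tuned to your application's log format.

```python
import re
from typing import Iterable, List, Tuple

# Illustrative keywords only; adjust them to your application's log format.
ERROR_PATTERN = re.compile(r"error|exception|fatal|traceback", re.IGNORECASE)


def find_error_lines(lines: Iterable[str]) -> List[Tuple[int, str]]:
    """Return (1-based line number, line) for every line matching ERROR_PATTERN."""
    return [
        (number, line.rstrip("\n"))
        for number, line in enumerate(lines, start=1)
        if ERROR_PATTERN.search(line)
    ]
```

You could feed it the captured output of kubectl logs <pod> (or docker logs <container-id>) after splitting it with splitlines().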

Example:

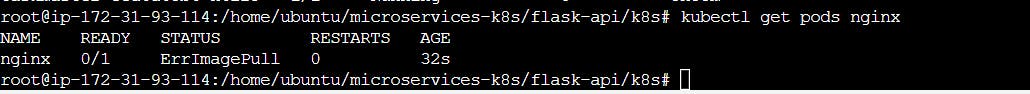

The pod.yml manifest below deliberately contains an incorrect image name: instead of nginx:1.14.2, I used nginxp:1.14.2.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginxp:1.14.2   # deliberate typo: the correct image is nginx:1.14.2
    ports:
    - containerPort: 80
```

```shell
kubectl apply -f pod.yml
kubectl get pods nginx
kubectl describe pods nginx
```

Incorrect image name or tag: this error usually occurs because the image name or tag was mistyped in the pod manifest. Confirm the correct image name with docker pull, then fix it in the pod manifest and apply the manifest again.

By reading the error message reported in the pod's events (shown by kubectl describe) and referring to the official Kubernetes documentation, we can resolve the issue.
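The same failure can be detected programmatically from the pod's status. The sketch below assumes you have the output of kubectl get pod nginx -o json. It reports containers whose waiting reason is one of the image-related reasons the kubelet uses: ErrImagePull, ImagePullBackOff, or InvalidImageName. The embedded JSON is a trimmed, hypothetical excerpt for illustration, not real cluster output.

```python
import json
from typing import Any, Dict, List

# Waiting reasons the kubelet reports when it cannot pull or parse an image.
IMAGE_PULL_REASONS = {"ErrImagePull", "ImagePullBackOff", "InvalidImageName"}

# Trimmed, hypothetical excerpt of `kubectl get pod nginx -o json` output.
SAMPLE_POD_JSON = """
{"metadata": {"name": "nginx"},
 "status": {"phase": "Pending",
  "containerStatuses": [{"name": "nginx", "image": "nginxp:1.14.2",
   "state": {"waiting": {"reason": "ImagePullBackOff",
                         "message": "Back-off pulling image nginxp:1.14.2"}}}]}}
"""


def image_pull_failures(pod: Dict[str, Any]) -> List[Dict[str, str]]:
    """Return one entry per (init) container stuck on an image pull problem."""
    status = pod.get("status", {})
    statuses = status.get("initContainerStatuses", []) + status.get("containerStatuses", [])
    failures = []
    for cs in statuses:
        # A running or terminated container has no "waiting" key.
        waiting = cs.get("state", {}).get("waiting") or {}
        reason = waiting.get("reason", "")
        if reason in IMAGE_PULL_REASONS:
            failures.append({
                "container": cs.get("name", ""),
                "image": cs.get("image", ""),
                "reason": reason,
                "message": waiting.get("message", ""),
            })
    return failures
```

For the manifest above, image_pull_failures(json.loads(SAMPLE_POD_JSON)) points straight at the mistyped nginxp:1.14.2 image.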

Analyzing Container logs

Debugging container images involves techniques such as running the container in interactive mode, using a shell to inspect the container's filesystem, and attaching a debugger to a running container. It helps identify and fix issues in the containerized application.
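The example below uses docker exec on the worker node. As an alternative that needs no node access, recent Kubernetes versions offer kubectl debug (ephemeral containers are stable since v1.25). The sketch below only builds the two documented forms of that command: an ephemeral debug container that targets an existing container, and a debug copy of the pod with a shell as its command. The pod names, container names, and the busybox:1.28 image are illustrative assumptions.

```python
import subprocess
from typing import List


def debug_ephemeral(pod: str, target: str, image: str = "busybox:1.28") -> List[str]:
    # Adds an ephemeral container to the running pod; --target points it at the
    # process namespace of an existing container (if the runtime supports it),
    # which helps when the application image ships without a shell.
    return ["kubectl", "debug", "-it", pod, f"--image={image}", f"--target={target}"]


def debug_copy(pod: str, copy_name: str, container: str, shell: str = "sh") -> List[str]:
    # Creates a copy of the pod with the container's command replaced by a shell,
    # so you can inspect the filesystem without touching the original pod.
    return ["kubectl", "debug", pod, "-it", f"--copy-to={copy_name}",
            f"--container={container}", "--", shell]


def run_interactive(cmd: List[str]) -> int:
    # No output capture: the interactive session needs direct terminal access.
    return subprocess.run(cmd, check=False).returncode
```

Remember to delete a pod created with --copy-to once you are done debugging.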

Example:

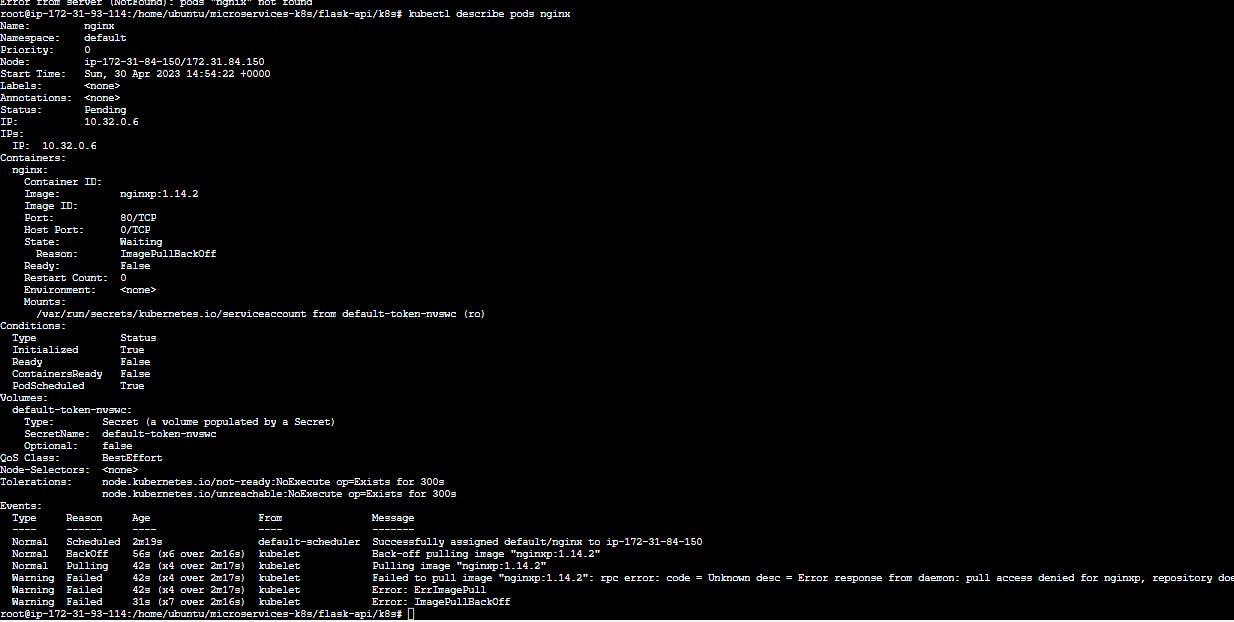

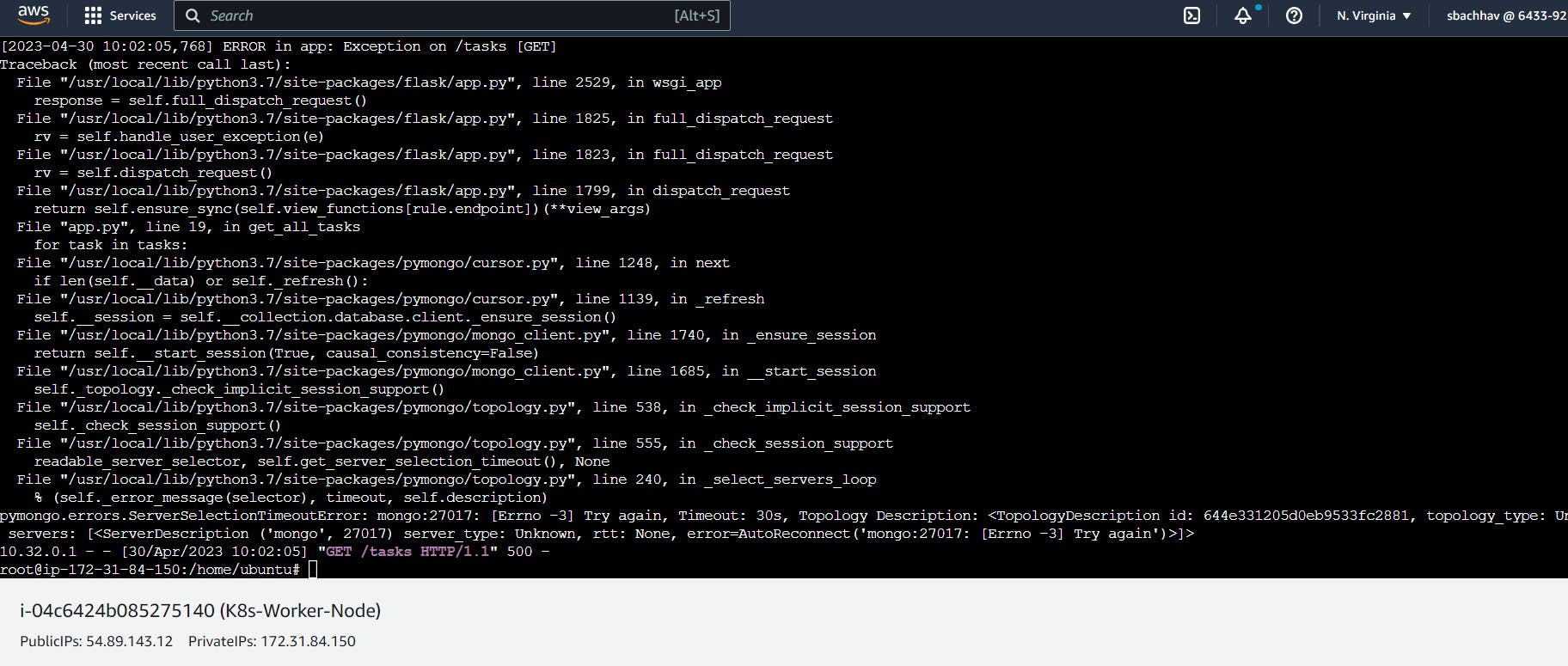

In this example, I deployed a Flask and MongoDB microservices project on a Kubernetes cluster built with kubeadm. The pods, nodes, and services all appear to be working normally:

```shell
kubectl get nodes
kubectl get pods
kubectl get svc
```

Running docker ps on the worker node also shows that the containers are up and running:

```shell
docker ps
docker exec -it <CONTAINER-ID> bash
```

docker exec into the MongoDB container also works as expected.

In Postman, a request to the taskmaster pod, exposed through a NodePort service, returns the correct output:

http://<worker-node-ip>:<node-port>

However, when we called the endpoint that returns all tasks, the server responded with an HTTP 500 (Internal Server Error).
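To repeat this Postman check from a script, the sketch below sends a GET request with Python's standard urllib and returns the status code. It returns error statuses such as 500 too, which urlopen raises as HTTPError. The node IP, port, and any path such as /tasks are hypothetical placeholders; the original does not list the taskmaster routes.

```python
import urllib.error
import urllib.request


def nodeport_url(node_ip: str, node_port: int, path: str = "/") -> str:
    # A NodePort Service is reachable on the nodes' IPs at the allocated port.
    return f"http://{node_ip}:{node_port}{path}"


def http_status(url: str, timeout: float = 5.0) -> int:
    """GET the URL and return the HTTP status code, including 4xx/5xx codes."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx; its code is the status we want.
        return err.code
```

A 200 from the root path combined with a 500 from the tasks endpoint suggests the web container itself is up. The failure is more likely in something the handler depends on, which is why the next step is the container logs.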

To troubleshoot, we inspected the logs of the application container on the worker node:

```shell
docker logs c6f64f51fff8
```

The application container is unable to connect to the MongoDB container on port 27017, resulting in a ServerSelectionTimeoutError.
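PyMongo raises ServerSelectionTimeoutError when it cannot reach a suitable MongoDB server in time. A useful next step is therefore to test plain TCP reachability from inside the application container, for example after docker exec or kubectl exec into it. The sketch below assumes MongoDB is reachable under a DNS name such as mongo. That name is hypothetical; use the Service name from your own manifests. If the check fails, the usual suspects are the host name in the app's connection string, the Service's selector and port, and whether the MongoDB pod is ready.

```python
import socket


def can_connect(host: str, port: int = 27017, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts (socket.timeout is an OSError
        # subclass) and DNS failures (socket.gaierror).
        return False
```

For instance, can_connect("mongo") returning False from inside the taskmaster container would confirm a network or naming problem rather than a bug in the Flask code.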

Conclusion

Through these hands-on examples, we have seen how to troubleshoot and debug Kubernetes errors by analyzing pod events and container logs with kubectl and docker commands.

To be continued...

I plan to continually update my troubleshooting knowledge by documenting the errors encountered during Kubernetes hands-on projects along with their respective resolution steps.

Thanks and keep learning.

#Kubernetes #Devops #Trainwithshubham #Kubeweek #day7 #kubeweekchallenge #ContainerOrchestration #TechBlog #CloudNative